Parallel Computation

(Preliminary Modern Computation)

Introduction

Parallel computing is a type of computation in which many calculations or the execution of processes are carried out concurrently. Large problems can often be divided into smaller ones, which can then be solved at the same time.

There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism.
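As a minimal sketch of dividing a large problem into smaller ones (data parallelism), the Python code below splits a list into chunks, solves each chunk in a separate worker, and combines the partial results. The function names and the chunk count are illustrative assumptions. Note that CPython threads do not run CPU-bound Python code truly in parallel because of the global interpreter lock, so for real speedup `ProcessPoolExecutor` would be the usual choice; threads keep the example simple.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, n_chunks):
    """Split data into n_chunks contiguous pieces of nearly equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The smaller sub-problem: each chunk is solved independently."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, n_workers=4):
    chunks = split_into_chunks(data, n_workers)
    # Each chunk goes to a worker; map returns results in chunk order.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = list(pool.map(sum_of_squares, chunks))
    # Combine the partial results into the final answer.
    return sum(partial_results)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1, 101))))  # 338350
```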

Requirements of parallel computation:

- Algorithm
- Programming language
- Compiler

A computational problem must be able to:

- Be broken into discrete pieces of work that can be solved simultaneously
- Execute multiple program instructions at any moment in time
- Be solved in less time with multiple computing resources than with a single computing resource

Why use parallel computation:

- Save time
- Solve larger problems
- Provide concurrency

Libraries and interfaces used in parallel programming:

- MPI (Message Passing Interface)
- PVM (Parallel Virtual Machine)

Parallel computation is divided into 6 parts:

- Parallelism Concept
- Distributed Processing
- Architectural Parallel Computer
- Introduction of Thread Programming
- Introduction of Message Passing, OpenMP
- Introduction of CUDA GPU Programming

Parallelism Concept

Parallel computation is a form of computation in which a computer performs many tasks simultaneously.

Distributed Processing

Distributed processing is the joint processing of data by a central computer and several smaller computers that are interconnected through communication channels.
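A minimal Python sketch of this arrangement, assuming threads stand in for the smaller computers and `queue.Queue` objects stand in for the communication channels (a real system would use separate machines and a network). The central computer sends jobs over one channel, the workers return results over another, and a `None` message is a hypothetical stop signal.

```python
import queue
import threading


def smaller_computer(worker_id, task_channel, result_channel):
    """A worker node: takes jobs from its channel until it receives a stop signal."""
    while True:
        job = task_channel.get()
        if job is None:  # stop signal from the central computer
            break
        job_id, value = job
        # Squaring stands in for real processing work.
        result_channel.put((job_id, worker_id, value * value))


def central_computer(values, n_workers=3):
    """Distribute jobs to the workers over channels, then gather the results."""
    task_channel = queue.Queue()    # central computer -> workers
    result_channel = queue.Queue()  # workers -> central computer
    workers = [
        threading.Thread(target=smaller_computer, args=(i, task_channel, result_channel))
        for i in range(n_workers)
    ]
    for w in workers:
        w.start()

    for job_id, value in enumerate(values):
        task_channel.put((job_id, value))
    for _ in workers:
        task_channel.put(None)  # one stop signal per worker

    # Collect exactly one result per job; arrival order may vary.
    results = [None] * len(values)
    for _ in values:
        job_id, _worker_id, squared = result_channel.get()
        results[job_id] = squared

    for w in workers:
        w.join()
    return results


if __name__ == "__main__":
    print(central_computer([1, 2, 3, 4, 5]))  # [1, 4, 9, 16, 25]
```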

Architectural Parallel Computer

Parallel computing is a technique for performing computation simultaneously by using multiple computers (or processors) at the same time. It is usually needed when the required capacity is very large, either because large amounts of data must be processed or because many computing processes must be served.

Parallel computer architectures by Flynn’s classification:

- SISD
- SIMD
- MISD
- MIMD

SISD

SISD stands for Single Instruction, Single Data. It is the model that corresponds to the classic von Neumann architecture, because it uses only one processor, which executes one instruction stream on one data stream.

SIMD

It stands for Single Instruction, Multiple Data. SIMD uses multiple processors that execute the same instruction, but each processor works on different data. For example, suppose we want to find the number 27 in a sequence of 100 numbers using 5 processors: each processor runs the same search instruction on its own block of 20 numbers, so the whole sequence is searched in parallel.
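The search example above can be sketched in Python, assuming 5 worker threads stand in for the 5 processors. Every worker runs the same function (the single instruction stream) on a different block of 20 numbers. The input list is hypothetical: `(7 * i) % 100` is a permutation of 0..99, so 27 appears exactly once, at index 61. This only illustrates the SIMD idea at the software level; real SIMD hardware applies one instruction to many data elements inside a single processor or vector unit.

```python
from concurrent.futures import ThreadPoolExecutor


def search_block(block, offset, target):
    """The single instruction stream: every worker runs this same search on its own block."""
    return [offset + i for i, value in enumerate(block) if value == target]


def simd_style_search(numbers, target, n_workers=5):
    """Split the numbers into n_workers blocks and search all blocks concurrently."""
    if not numbers:
        return []
    block_size = -(-len(numbers) // n_workers)  # ceiling division: 100 numbers / 5 -> 20
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        futures = [
            pool.submit(search_block, numbers[start:start + block_size], start, target)
            for start in range(0, len(numbers), block_size)
        ]
        hits = []
        for future in futures:
            hits.extend(future.result())
    return hits


if __name__ == "__main__":
    # Hypothetical data: a permutation of 0..99 (7 and 100 share no common factor).
    numbers = [(7 * i) % 100 for i in range(100)]
    print(simd_style_search(numbers, 27))  # [61]
```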

MISD

It stands for Multiple Instruction, Single Data. MISD uses many processors, each executing different instructions but processing the same data. This is the opposite of the SIMD model.
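Genuine MISD hardware is uncommon, so the following Python sketch only illustrates the idea: several different operations (here `min`, `max`, `sum`, and `len`, chosen arbitrarily) are applied concurrently to the same data, with worker threads standing in for the processors.

```python
from concurrent.futures import ThreadPoolExecutor


def misd_style(data):
    """Apply several different operations (instruction streams) to the same data concurrently."""
    operations = {"min": min, "max": max, "sum": sum, "count": len}
    with ThreadPoolExecutor(max_workers=len(operations)) as pool:
        # Every operation receives the very same data object.
        futures = {name: pool.submit(op, data) for name, op in operations.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(misd_style([4, 8, 15, 16, 23, 42]))
    # {'min': 4, 'max': 42, 'sum': 108, 'count': 6}
```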

MIMD

It stands for Multiple Instruction, Multiple Data. MIMD uses many processors, each executing different instructions and processing different data. However, many computers that use the MIMD model also include components for the SIMD model.
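A minimal Python sketch of the MIMD idea, assuming worker threads stand in for independent processors: each job pairs its own function with its own data, so every stream runs different code on different data. The jobs in the usage example are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def mimd_style(jobs):
    """Run each (function, data) pair as an independent stream: different code on different data."""
    with ThreadPoolExecutor(max_workers=max(1, len(jobs))) as pool:
        futures = [pool.submit(func, data) for func, data in jobs]
        return [future.result() for future in futures]


if __name__ == "__main__":
    jobs = [
        (sum, [1, 2, 3, 4]),      # stream 1: add numbers
        (str.upper, "parallel"),  # stream 2: transform text
        (sorted, [5, 3, 9, 1]),   # stream 3: sort a different list
    ]
    print(mimd_style(jobs))  # [10, 'PARALLEL', [1, 3, 5, 9]]
```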

Introduction of Thread Programming

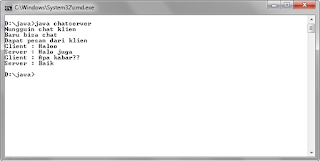

A thread is a flow of control within a process that serves a task requested by the user. The concept of threading is to run two or more different activities of the same program at the same time.
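A minimal Python sketch of two threads in one process running two different activities at the same time. The functions `count_words` and `count_vowels` are hypothetical examples; both threads write into the same dictionary because threads of a process share its memory.

```python
import threading


def count_words(text, results):
    results["words"] = len(text.split())


def count_vowels(text, results):
    results["vowels"] = sum(1 for ch in text.lower() if ch in "aeiou")


def run_two_threads(text):
    results = {}  # shared memory: both threads belong to the same process
    t1 = threading.Thread(target=count_words, args=(text, results))
    t2 = threading.Thread(target=count_vowels, args=(text, results))
    t1.start()  # both flows of control now run concurrently
    t2.start()
    t1.join()   # wait for both threads to finish
    t2.join()
    return results


if __name__ == "__main__":
    print(run_two_threads("Threads share memory"))  # words: 3, vowels: 6
```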

Benefits

Multithreading on a single processor is used for:

- Running foreground and background work at once in one application
- Improved asynchronous processing
- Faster program execution
- Better program organization
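The first benefit, foreground and background work in one application, can be sketched in Python as follows. The assumption is that "slow" work (here just multiplying by 10, a placeholder for something like saving a file) is handed to a background thread through a queue, while the foreground keeps handling items without waiting; `None` is a hypothetical sentinel that tells the background thread to stop.

```python
import queue
import threading


def background_worker(jobs, done):
    """Background thread: handles slow jobs while the foreground keeps running."""
    while True:
        item = jobs.get()
        if item is None:  # sentinel: no more work
            break
        done.append(item * 10)  # placeholder for slow work such as saving a file


def run_app(items):
    jobs = queue.Queue()
    done = []
    worker = threading.Thread(target=background_worker, args=(jobs, done))
    worker.start()

    log = []
    for item in items:
        jobs.put(item)  # hand the slow part to the background thread
        log.append(f"foreground handled {item}")  # foreground continues without waiting
    jobs.put(None)
    worker.join()  # wait for the background work before reporting
    return log, done


if __name__ == "__main__":
    print(run_app([1, 2, 3]))
```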

Multicore Programming

Multicore systems put pressure on programmers to overcome challenges that include:

- Dividing activities
- Balance
- Data splitting
- Data dependency
- Testing and debugging
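The data dependency and testing challenges can be illustrated with a minimal Python sketch of a shared counter. Each increment is a read-modify-write that depends on the previous value; if two threads interleave that update, increments can be lost, and such bugs appear only under some timings, which is what makes them hard to test and debug. The lock serializes the dependent update. The class and function names are hypothetical.

```python
import threading


class SharedCounter:
    """A counter shared by several threads; a lock protects the dependent update."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Read-modify-write depends on the previous value, so it must not interleave.
        with self._lock:
            self.value += 1


def count_with_threads(n_threads=4, increments=10_000):
    counter = SharedCounter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(count_with_threads())  # 40000
```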

Benefits of Threads

Multithreading is useful on both multiprocessor and single-processor systems. On multiprocessor systems, threads provide:

- A unit of parallel execution, i.e. a level of parallelism granularity
- Better performance than process-based parallelism

The main benefit of having many threads in one process is to maximize the degree of concurrency between closely related operations. Such applications can be implemented much more efficiently as a set of threads than as a set of separate processes, because threads share the memory of their process and are cheaper to create and switch between. Threading is divided into two types:

- Static threading
- Dynamic multithreading

Static Threading

This technique is commonly used on computers with multiprocessor chips. It provides threads that share a common memory; each thread has its own program counter and can execute the program independently. The operating system places one thread on a processor and swaps it out for another thread that wants to use the processor.

Dynamic Multithreading

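Dynamic multithreading relies on a concurrency platform that provides a scheduler which balances the load automatically. Such platforms are still evolving, but they already support two features: nested parallelism, which lets a subroutine be spawned so that the caller can continue while the spawned subroutine runs, and parallel loops, whose iterations can execute concurrently.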
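Nested parallelism can be sketched in Python with the classic parallel Fibonacci example: each call "spawns" a child thread for one subproblem, keeps computing the other subproblem itself, and then "syncs" by joining the child. This is an assumption-laden illustration only: plain `threading` relies on the operating system scheduler rather than the automatic load-balancing scheduler of a dynamic multithreading platform, and the `cutoff` parameter (below which calls run serially to avoid creating too many threads) is a hypothetical tuning knob.

```python
import threading


def parallel_fib(n, cutoff=8):
    """Nested parallelism: each call may spawn a child thread for one subproblem."""
    if n < 2:
        return n
    if n <= cutoff:
        # Small subproblems run serially; spawning threads for them costs more than it saves.
        return parallel_fib(n - 1, cutoff) + parallel_fib(n - 2, cutoff)

    result = {}

    def child():
        result["x"] = parallel_fib(n - 1, cutoff)

    t = threading.Thread(target=child)  # "spawn" the first subproblem
    t.start()
    y = parallel_fib(n - 2, cutoff)     # the parent keeps working meanwhile
    t.join()                            # "sync": wait for the spawned child
    return result["x"] + y


if __name__ == "__main__":
    print(parallel_fib(15))  # 610
```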

Introduction of Message Passing, OpenMP

OpenMP (Open Multi-Processing) is an application programming interface (API) that supports shared-memory multiprocessing programming in C, C++, and Fortran on a variety of architectures, including UNIX and Microsoft Windows platforms. OpenMP consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior.

Uses of message passing:

- Provides functions for exchanging messages
- Makes it possible to write portable parallel code
- Achieves high performance in parallel programming
- Handles problems involving irregular or dynamic data relationships that do not fit the data-parallel model well
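A minimal Python sketch of the message-passing style, assuming threads stand in for the separate processes ("ranks") and one `queue.Queue` per rank stands in for its inbox. The hypothetical `send` and `recv` helpers play the role that functions such as `MPI_Send` and `MPI_Recv` play in a real MPI program. Each rank sends a message to the next rank in a ring and receives one from the previous rank; no data is shared except through messages (each rank writes only its own slot of `received`).

```python
import queue
import threading


def run_ring(n_ranks=4):
    """Each rank sends to rank+1 and receives from rank-1, forming a ring."""
    mailboxes = [queue.Queue() for _ in range(n_ranks)]  # one inbox per rank
    received = [None] * n_ranks

    def send(dest, message):
        mailboxes[dest].put(message)

    def recv(rank):
        return mailboxes[rank].get()  # blocks until a message arrives

    def rank_main(rank):
        send((rank + 1) % n_ranks, ("hello from", rank))
        received[rank] = recv(rank)

    threads = [threading.Thread(target=rank_main, args=(r,)) for r in range(n_ranks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received


if __name__ == "__main__":
    print(run_ring(4))
    # rank 0 receives from rank 3, rank 1 from rank 0, and so on
```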

Introduction of CUDA GPU Programming

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model that makes the use of GPUs for general-purpose computing simple and elegant.

A GPU (Graphics Processing Unit) was initially a processor dedicated solely to rendering on graphics cards, but as the demand for rendering grew, especially for near-real-time processing, GPUs became highly parallel processors that are now also used for general-purpose computing.
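CUDA organizes a kernel launch as a grid of blocks, each containing many threads, and each thread computes its own global index as `blockIdx.x * blockDim.x + threadIdx.x`. The Python sketch below is only a sequential emulation of that indexing model for a vector addition (it is not CUDA code and runs nothing on a GPU); the helper names `emulate_kernel_launch` and `vector_add_kernel` are hypothetical, and on a real GPU the kernel invocations would run in parallel rather than one after another.

```python
def emulate_kernel_launch(kernel, grid_dim, block_dim, *args):
    """Run `kernel` once per (block, thread) pair, like a 1-D CUDA launch, but sequentially."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    # Global index, mirroring blockIdx.x * blockDim.x + threadIdx.x in CUDA C++.
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the grid may contain more threads than elements
        out[i] = a[i] + b[i]


def vector_add(a, b, threads_per_block=4):
    out = [0] * len(a)
    blocks = (len(a) + threads_per_block - 1) // threads_per_block  # ceiling division
    emulate_kernel_launch(vector_add_kernel, blocks, threads_per_block, a, b, out)
    return out


if __name__ == "__main__":
    print(vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50]))  # [11, 22, 33, 44, 55]
```

With 5 elements and 4 threads per block, two blocks (8 threads) are launched, and the bounds check keeps the 3 extra threads from writing past the end of the array; the same guard is a common pattern in real CUDA kernels.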